DATA NATURE BLOG

HOW TO STOP IGNORING

FEEDBACK ON BI REPORTS

By Alex Barakov

January 2023

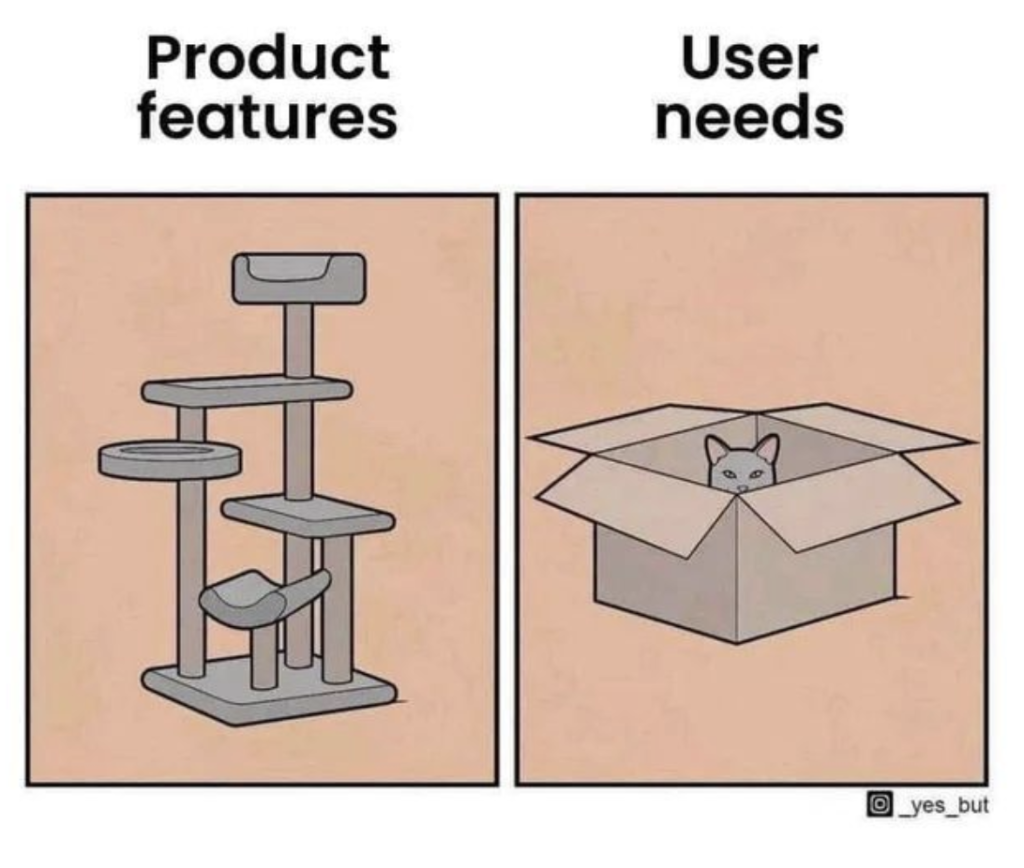

Report author bias

Data, requests, charts, requirements, more requirements, more data, and finally a release, followed at once by a new project and a new report. We put effort into dashboards during development and user acceptance testing, switch to new tasks, and later discover disappointing usage statistics.

I have also noticed among BI analysts (myself included) a tendency to be defensive about our own vision of how a specific dashboard should be organized, and to dismiss user feedback as incorrect when users don't understand or appreciate its capabilities, design, etc. Individual negative feedback is often brushed off as insignificant, so the report developer may not realize that the report is not being understood or accepted by users on a large scale. This is a form of cognitive bias among report developers. It's easy to say, "Users always want their own Excel, what can I do?"

In short, the rest of this post focuses on applying CustDev (customer development) practices to dashboarding: obtaining feedback throughout the lifecycle of analytical products and, more specifically, configuring the feedback flow to improve report adoption.

CustDev practices have proven effective in product development. User-facing internet services and mobile applications all put a great deal of effort into configuring the user feedback flow and analyzing the customer funnel from first contact to departure. For such products, improvements in this area have a direct impact on financial results (through classic metrics like ARPPU, LTV, etc.). However, CustDev ideas are not widely implemented in BI projects, even though treating a report as a product and the user as a paying customer would be a logical approach. BI teams seem to take a certain pride in not adopting a product-based approach.

History of the topic

When collecting feedback for BI projects, it is important to separate large-scale corporate surveys from mechanisms for continuous feedback collection (anytime/ongoing feedback) built into individual products and tools.

The former (1) generally measure satisfaction with systems or with the work of departments and teams, and (2) are usually centralized and conducted once or twice a year. You can run a specialized survey for the BI system: it will give you valuable information for its development, but no detail on the situation in specific reports. Roman Bunin described this when discussing the "NPS for BI" metric.

The latter are embedded in user interfaces and aim to obtain a constant flow of feedback specific to a particular dashboard: its usefulness and understandability, its strengths and weaknesses. This is the category discussed below.

We started experimenting with a feedback tool in our BI system about 6 years ago. The original idea was simple: we placed an icon in the report that, when clicked, opened a feedback form page. It looked like this:

The form included:

- A rating (1-5 stars) for overall satisfaction,

- Additional scales, including data quality, usefulness in solving business tasks, ease of use, performance, and design quality,

- A field for a text comment.

In practice, the form ran into three problems:

- The problem of informing - the user didn't notice the icon or didn't understand its meaning, and so never clicked on it;

- The problem of simplicity - the user was intimidated by the form, which looked voluminous;

- The problem of motivation - the biggest one. The user knew about the feedback tool and had no difficulty filling out the form, but simply didn't want to write feedback on the report. They used it, but the report often wasn't critical to their work and therefore did not generate a strong desire to influence it.

At the time, we did not attach much importance to this tool: we did not emphasize the feedback icon or promote the feedback form. We took the result philosophically.

Museum of Design in Helsinki

Visitors are given a sticker upon exit to stick on a designated wall.

One benefit of this approach is that people enjoy "posting" their feedback, providing a positive incentive.

The downsides: counting the results requires manual labor, and there is almost no space for negative feedback in the first column. Also, people do not immediately understand what is what: they walk past to the middle, are too lazy to go back, and so seem to stick their stickers in the middle.

Problems and solutions

Time passed and we realized that feedback was interesting to us after all.

We began to analyze it.

The lack of information can be partially solved by promotion, notifications, training, etc. This is the same everywhere.

The problem of conciseness and simplicity of the form can also be solved. The form from Yandex Metrica in the screenshot below seems like a decent example. We took it as a basis and developed it further: when a low score was given for usefulness or convenience, an additional question appeared asking what exactly was behind it.
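
The conditional follow-up question can be sketched in a few lines of Python. This is only an illustration: the threshold of 2 on the 1-5 scale, the scale keys and the question wording are all assumptions, not the actual form we used.

```python
# Assumed threshold: scores of 2 or lower on the 1-5 scale count as "low".
LOW_SCORE = 2

# Hypothetical follow-up questions, keyed by the scale that triggers them.
FOLLOW_UPS = {
    "usefulness": "Which business task did the report fail to help with?",
    "convenience": "What was hard to find or understand in the report?",
}


def follow_up_questions(ratings):
    """Return the extra questions to show for the low scores in `ratings`.

    `ratings` maps a scale name to a 1-5 score; scales the user skipped
    are simply absent and never trigger a follow-up.
    """
    return [
        question
        for scale, question in FOLLOW_UPS.items()
        if scale in ratings and ratings[scale] <= LOW_SCORE
    ]
```

The point of the design is that the form stays short for satisfied users and only grows when there is something specific to ask about.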

The problem of motivation is the most difficult to solve.

I have seen approaches where a company organizes a 'Feedback Day' and rewards feedback on any corporate system with bonuses (the currency of the corporate loyalty program). The idea is good for raising awareness, but it gives a one-time boost and no more.

The truth is that users leave feedback when they are motivated to do so, and this motivation can be created by good design, easy access, and the ability to see the results of their feedback. But this is a complex, long-term process that requires a lot of effort and resources.

Ideas

We decided to move forward and develop the theme. This led to the idea of building a framework that finds those who have something to say and asks specifically for their feedback.

It seems logical to have two scenarios:

- We look at which report the employee used most frequently over the month and ask them by email to give feedback on that specific report.

- We look at which report the employee used consistently for 3+ months but stopped using during the last month, and ask for the reason by email.

A draft of such a feedback-request email for our project at Tinkoff Bank.

This way, we make sure that the request is relevant to the user and concerns a report they understand and find meaningful.
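
The two scenarios above could be sketched roughly as follows. This is a minimal illustration, not our production code: it assumes the BI usage log is available as a list of `(user, report, date)` view records, and the function names and the `min_streak` parameter are hypothetical.

```python
from collections import Counter, defaultdict
from datetime import date


def month_index(day):
    """Map a date to a running month number so that months can be subtracted."""
    return day.year * 12 + day.month


def select_requests(views, target, min_streak=3):
    """Pick feedback requests for the month that contains `target`.

    views: iterable of (user, report, date) records from a usage log
           (an assumed format).
    Returns (most_used, abandoned):
      most_used: {user: report viewed most often in the target month}
      abandoned: {user: [reports viewed in each of the `min_streak`
                  previous months but not at all in the target month]}
    """
    target_idx = month_index(target)
    counts = defaultdict(Counter)   # user -> views per report in target month
    months_used = defaultdict(set)  # (user, report) -> months with any view

    for user, report, day in views:
        idx = month_index(day)
        months_used[(user, report)].add(idx)
        if idx == target_idx:
            counts[user][report] += 1

    # Scenario 1: the report each user opened most often this month.
    most_used = {user: c.most_common(1)[0][0] for user, c in counts.items()}

    # Scenario 2: a steady habit that stopped in the target month.
    abandoned = defaultdict(list)
    for (user, report), months in months_used.items():
        had_streak = all(target_idx - k in months for k in range(1, min_streak + 1))
        if had_streak and target_idx not in months:
            abandoned[user].append(report)

    return most_used, dict(abandoned)
```

For example, a user who opened a hypothetical `churn` report in October, November and December but not in January would appear in `abandoned` when `target` is any date in January.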

We then generate and send hundreds of personalized emails to active users once a month and wait.

The code that builds the list of recipients and emails needs to handle a few nuances. For example:

- Do not send more than one email to one user per month.

- Do not send repeated requests for feedback on reports that the user has already provided feedback on.
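
These two rules can be applied as a simple filter over the candidate list. The sketch below assumes candidates arrive as `(user, report)` pairs in priority order and that we keep a record of who was already emailed this month and which reports each user has reviewed; all names here are hypothetical.

```python
def filter_requests(candidates, sent_this_month, already_reviewed):
    """Apply the two sending rules to a list of candidate requests.

    candidates:       list of (user, report) pairs, highest priority first
    sent_this_month:  set of users who already received a request this month
    already_reviewed: set of (user, report) pairs with feedback on record
    Returns the (user, report) pairs that should actually be emailed.
    """
    emailed = set(sent_this_month)  # copy, so the caller's set is not modified
    to_send = []
    for user, report in candidates:
        if user in emailed:
            continue  # rule 1: at most one email per user per month
        if (user, report) in already_reviewed:
            continue  # rule 2: never re-ask about a report already reviewed
        to_send.append((user, report))
        emailed.add(user)
    return to_send
```

Because candidates are processed in order, a user whose top report was already reviewed can still receive a request about their next candidate report.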

At present, there is no concrete data on the effectiveness of this approach. The framework is currently in the development pipeline, but it is relatively straightforward to implement and we plan to work on it this year.

It should also fit the next step: integrating the process with a chatbot, which would allow more natural and convenient communication with employees via corporate messaging platforms (such as Slack or Microsoft Teams). If feedback is solicited through a bot rather than by email, we expect a significant increase in response rates. In my opinion, rating a report you know well with stars or emoticons, writing a few words about it, and returning to other tasks would take only a matter of seconds.

Possible conversation of FeedbackBot with a report user in MS Teams

In conclusion, I must mention a scary possibility - there's no guarantee that this approach will be successful. Even if it does take off, it's not a certainty that all of you are prepared or willing to receive such a large amount of "truth" from users and change your views on reports. As in life, each person must decide for themselves what they are comfortable believing in.
